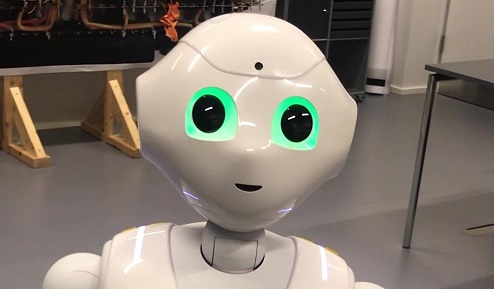

Meet Pepper

In recent years there has been a lot of discussion about humanoid robots, artificial intelligence and machine learning. Several companies have already created and developed robot prototypes that can simulate human behaviour and provide a range of services, from simple movement and dialogs to more complex services that involve machine learning and AI.

To investigate this new trend, we at Digi Labs have acquired Pepper, a humanoid robot from SoftBank Robotics. Pepper, the newest member of Move Lab, is capable of speech recognition and visual recognition, and can also recognise basic human emotions.

Pepper is equipped with a variety of sensors and cameras, including infrared sensors, bumpers, an inertial unit, 2D and 3D cameras, and sonars for omnidirectional, autonomous navigation. Pepper also has touch sensors and microphones that enable multimodal human-robot interaction.

Most importantly, Pepper has a built-in speech recognition module that allows him to communicate with humans in 15 different languages. Another significant feature of Pepper is its IDE (Integrated Development Environment) for Python programming. The robot's SDK gives access to Pepper's complete set of features, which can be used to build your own applications.

For less advanced users, Pepper also comes with Choregraphe, a platform where users can design the flow of actions and commands for interacting with Pepper simply by selecting different behaviours and combining them with dialogs.

We believe that Pepper can add value to our labs for research and educational purposes, and help us better understand human-robot interaction.